Machines are learning to read dogs better than we do.

For decades, people have tried to understand what dogs are saying with their eyes, tails, and tiny shifts in posture. Now, artificial intelligence is getting close to doing exactly that. Researchers have begun teaching machine-learning models to recognize the emotions behind barks, ear flicks, and wagging tails—turning centuries of human guessing into measurable data. Early versions of this tech can already detect signs of excitement, fear, or stress and send alerts straight to a smartphone. The result is a new era of companionship where intuition meets algorithms in real time.

1. AI is starting to translate canine emotions with accuracy.

Using computer vision and deep learning, researchers are training AI to recognize emotional states in dogs through subtle movement patterns. According to the University of Michigan, models analyzing vocalizations and body language can now identify states such as playfulness or aggression with over seventy percent accuracy. This marks one of the first major scientific attempts to quantify what has always been instinctive communication.

What’s remarkable is how these systems interpret clusters of data—tone, motion, and micro-gestures—to form a holistic emotional profile. It’s not mind-reading, but it’s the closest science has come to understanding how emotion looks in real time through a machine’s lens.

2. Specialized algorithms are learning to decode barking patterns.

Barks once thought to be random are turning out to have structure. Researchers found that AI can distinguish between different types of vocalizations—play, warning, or distress—with consistent accuracy, as reported by Scientific American. By training on hundreds of hours of dog audio, algorithms can separate emotional nuance from noise and assign meaning to specific sound clusters.

Unlike human listeners, who rely on tone alone, AI picks up minute pitch and duration changes undetectable to the ear. That microscopic detail is revealing a whole new dimension of dog communication and proving that barks aren’t as chaotic as they seem.

3. Computer vision can now interpret posture and movement.

According to researchers at the University of Tokyo, machine-learning systems trained on thousands of hours of dog footage can detect fine-grained changes in gait, ear position, and facial expression. These visual cues combine with audio data to form a layered emotional map. The goal isn’t just recognizing happiness or fear; it’s understanding patterns of stress or trust that unfold over time.

What makes this development so important is its use in real-world settings. AI no longer needs a lab environment; it can process video from everyday cameras, tracking dogs as they naturally behave at home or outdoors.

4. Emotion recognition tools are being built into smart collars.

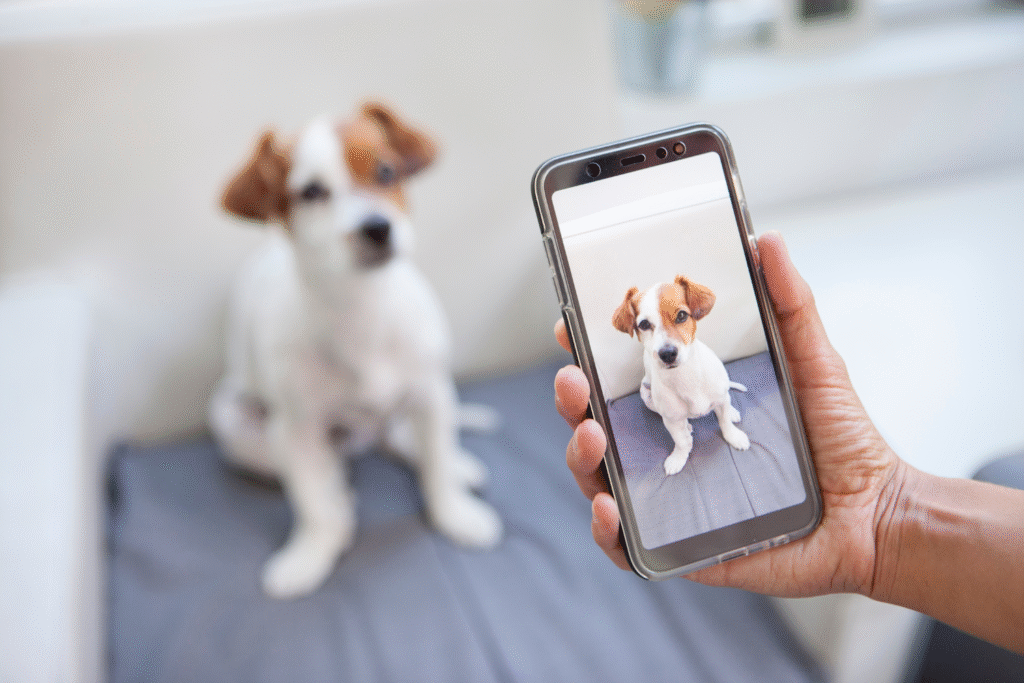

Several tech companies are integrating emotion-detecting AI into collars and harnesses. These wearables use microphones and accelerometers to monitor vocal tone, movement intensity, and body posture. The data feeds directly to mobile apps, where owners can see summaries like “your dog is anxious” or “your dog is calm.”

It’s still experimental, but the technology is improving fast. The collars can even send notifications when stress levels spike, allowing owners to intervene before behavior worsens. For many, it’s like having a silent translator that keeps watch twenty-four hours a day.

5. Machine learning thrives on individual dog profiles.

One breakthrough is personalization. Instead of assuming all dogs communicate the same way, AI models now build emotional baselines unique to each animal. Over time, they learn what “normal” looks like for a specific dog, refining accuracy as data grows.

That shift from generalization to individuality mirrors how humans bond with pets. The more context the AI gathers, the better it can interpret deviations from a dog’s baseline, catching illness, discomfort, or anxiety before humans do. It’s emotional pattern recognition customized to the quirks of a single companion.
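For readers curious what a per-dog baseline might look like in practice, here is a minimal Python sketch. It is an illustration only, not any product's actual method: the single numeric "activity score", the `DogBaseline` class, the `record` helper, and the three-standard-deviation threshold are all assumptions made up for this example. The idea is simply to keep a running average for each dog and flag readings that fall far outside that dog's own normal range.

```python
import math
from dataclasses import dataclass


@dataclass
class DogBaseline:
    """Running statistics for one dog's (hypothetical) activity score."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations (Welford's method)

    def update(self, value: float) -> None:
        """Fold one new reading into the running mean and variance."""
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)

    def std(self) -> float:
        """Sample standard deviation of the readings seen so far."""
        if self.count < 2:
            return 0.0
        return math.sqrt(self.m2 / (self.count - 1))

    def is_unusual(self, value: float, threshold: float = 3.0,
                   min_samples: int = 5) -> bool:
        """True if value is more than `threshold` deviations from this dog's mean."""
        if self.count < min_samples:
            return False  # not enough history to judge yet
        spread = self.std()
        if spread == 0.0:
            return value != self.mean
        return abs(value - self.mean) / spread > threshold


# One baseline per dog, keyed by an assumed dog identifier.
baselines: dict[str, DogBaseline] = {}


def record(dog_id: str, value: float) -> bool:
    """Check a reading against the dog's own baseline, then learn from it."""
    baseline = baselines.setdefault(dog_id, DogBaseline())
    unusual = baseline.is_unusual(value)
    baseline.update(value)
    return unusual
```

Calling `record("rex", score)` for each new reading returns `True` only when the score is far from what has been typical for that particular dog. A brand-new dog is never flagged until a handful of readings have been collected, which mirrors the point that accuracy improves as data grows.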

6. Veterinary applications are already emerging from this research.

Clinics are exploring AI-based analysis of patient behavior during exams or recovery. These systems track micro-movements and breathing rhythms to detect pain or distress earlier than human observation allows. Veterinarians say the data helps guide sedation, surgery, and post-operative care decisions.

Beyond clinical use, rescue shelters are also testing the technology to assess welfare. Identifying fear or aggression through behavior patterns could reduce stress in crowded environments and improve adoption matches between dogs and families.

7. Training programs are adapting AI feedback for behaviorists.

Dog trainers and animal behaviorists are starting to use AI data to refine their methods. By capturing posture and vocal data, the systems show whether a dog’s stress level rises or falls during a session. Trainers can then adjust techniques in real time, basing decisions on measurable evidence instead of assumptions.

That feedback loop offers a new level of transparency between professional and pet. It also gives owners tangible proof that progress is happening, helping to bridge communication gaps that used to rely entirely on interpretation.

8. Privacy and ethics questions are now entering the conversation.

The same tools that read emotions also record large volumes of video and audio data. Researchers are debating how to store and use this information responsibly. If AI can decode behavior patterns, it might also expose data about a person’s home environment or routines.

Ethicists argue that emotional analysis should focus solely on animal welfare, not on commercial data mining. Balancing innovation with transparency is becoming as important as the technology itself. Each new capability brings new responsibility to protect both animal and owner.

9. Cultural perceptions of communication are changing worldwide.

In places where dogs were once seen primarily as workers or guards, AI-driven interpretation is reframing them as emotional communicators. Being able to measure affection or fear scientifically reshapes how societies perceive animal intelligence.

This technology is bridging cultural divides by making empathy measurable. Seeing quantifiable data that confirms emotion challenges outdated beliefs that animals act purely on instinct. The science quietly supports what many owners have always felt—that dogs truly feel, and now, machines can finally back that up.

10. The next step is merging sight, sound, and heart rate.

Developers are working on multi-sensory models that combine video, audio, and biometric sensors. Collars may soon track pulse, breathing, and tail motion simultaneously to paint a more complete emotional picture. The goal isn’t translation into words, but emotional literacy—a way for humans to see in data what dogs express in movement.

The future of companionship may not rely on language at all, but on empathy enhanced by machines. The algorithms aren’t replacing connection; they’re amplifying it, giving humans the tools to finally listen more closely to what’s been said in silence all along.